Prerequisites

An Active Azure Subscription

Java Development Kit

Apache Maven

Azure Functions

Azure Functions is an event-driven, serverless compute service that lets developers run blocks of code (functions) in Azure. Because it is serverless, developers can focus on functionality rather than on the infrastructure the code is deployed to. From a cost perspective, users pay only for the compute resources required to execute the code. This consumption model saves costs by using compute resources only when they are required.

Azure Functions provides compute on demand: a block of code deployed as a function is executed whenever its configured event occurs. Azure Functions scales the resources and the number of function instances up and down as needed.

Use case

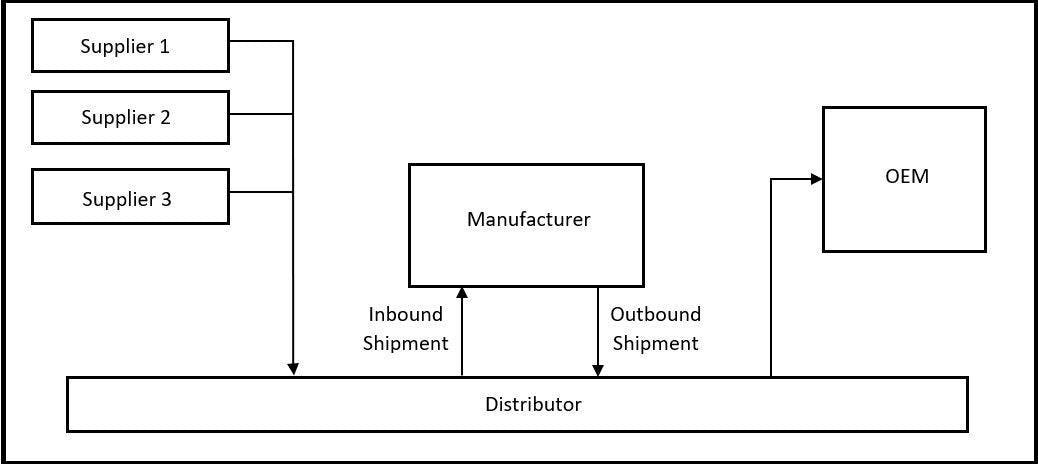

An automotive component manufacturer procures raw material from suppliers, manufactures components, and ships the finished products to OEMs. Diagram 1.1 shows this supply chain.

Diagram 1.1. Supply Chain Diagram


The manufacturer carries out inbound and outbound transactions on a fortnightly basis. It uses an internal application, managed by its IT team, to manage inventory, warehouses, suppliers, purchases, and orders.

During day-to-day operations, multiple invoices are received or generated, and all of them are dumped into a common storage location. Over time, this leads to a large pile of invoices that is difficult to manage and organise.

As an automation initiative, the IT team wants to automate invoice management. As a first step, it has decided to automate the segregation of invoices into three categories: Sales Invoice, Purchase Invoice, and Travel Invoice.

Architecture

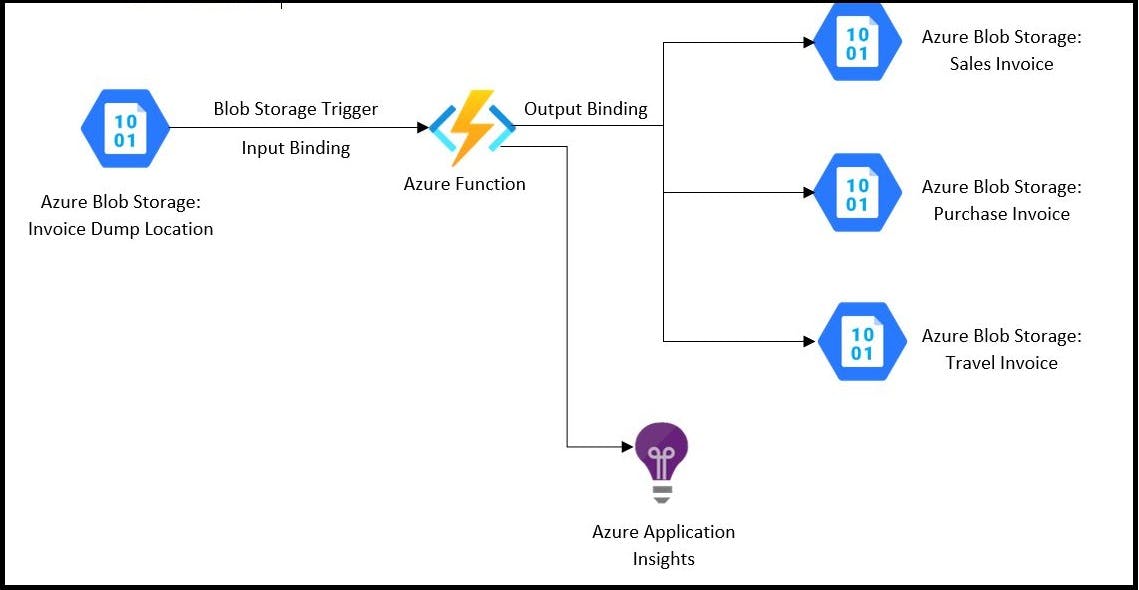

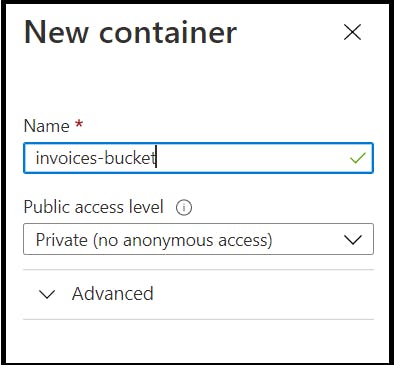

Diagram 1.2. Architecture for Blob Processing


- Blob Storage Trigger (direction: in): Starts a function when a new or updated blob is detected.
- Input Binding (direction: in): Provides the contents of the blob as input to the function.
- Output Binding (direction: out): Creates a new blob and copies into it the contents of the blob received from the input binding.
- Azure Functions: Processes blobs based on the invoice file name, as shown below:

Sales Invoice: PY-SALINV-<Batch.No>-<Date>-<Invoice.No>

Purchase Invoice: PY-PURINV-<Batch.No>-<Date>-<Invoice.No>

Travel Invoice: PY-TRAINV-<Batch.No>-<Date>-<Invoice.No>
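The naming convention above can be checked and split into its parts with a regular expression. The following is a minimal sketch rather than code from the project: the class `InvoiceName` and its fields are hypothetical, and it assumes that the batch number, date and invoice number never contain a hyphen and that the name is passed without a file extension.

```java
import java.util.Optional;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Hypothetical helper that splits an invoice blob name into its parts. */
final class InvoiceName {
    // Assumption: <Batch.No>, <Date> and <Invoice.No> contain no hyphens.
    private static final Pattern FORMAT =
            Pattern.compile("^PY-(SALINV|PURINV|TRAINV)-([^-]+)-([^-]+)-([^-]+)$");

    final String category;
    final String batchNo;
    final String date;
    final String invoiceNo;

    private InvoiceName(String category, String batchNo, String date, String invoiceNo) {
        this.category = category;
        this.batchNo = batchNo;
        this.date = date;
        this.invoiceNo = invoiceNo;
    }

    /** Returns the parsed parts, or empty when the name does not follow the convention. */
    static Optional<InvoiceName> parse(String blobName) {
        if (blobName == null) {
            return Optional.empty();
        }
        Matcher matcher = FORMAT.matcher(blobName);
        if (!matcher.matches()) {
            return Optional.empty();
        }
        return Optional.of(new InvoiceName(
                matcher.group(1), matcher.group(2), matcher.group(3), matcher.group(4)));
    }
}
```

For example, `InvoiceName.parse("PY-SALINV-B01-20210915-1001")` yields a value whose `category` is `SALINV`, while a name with an unknown category code yields an empty `Optional`. The sample name is made up for illustration.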

Creating Azure Functions in Portal

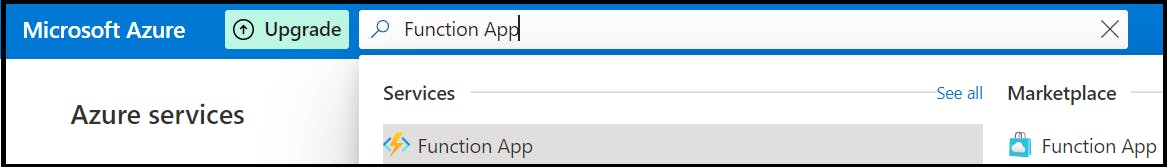

Step1: Search for Function App from the search window in Azure portal

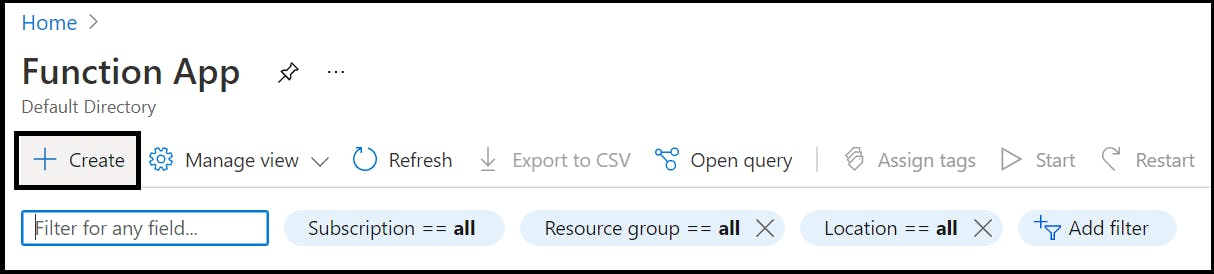

Step2: Click on Create to create new instance of Azure Functions

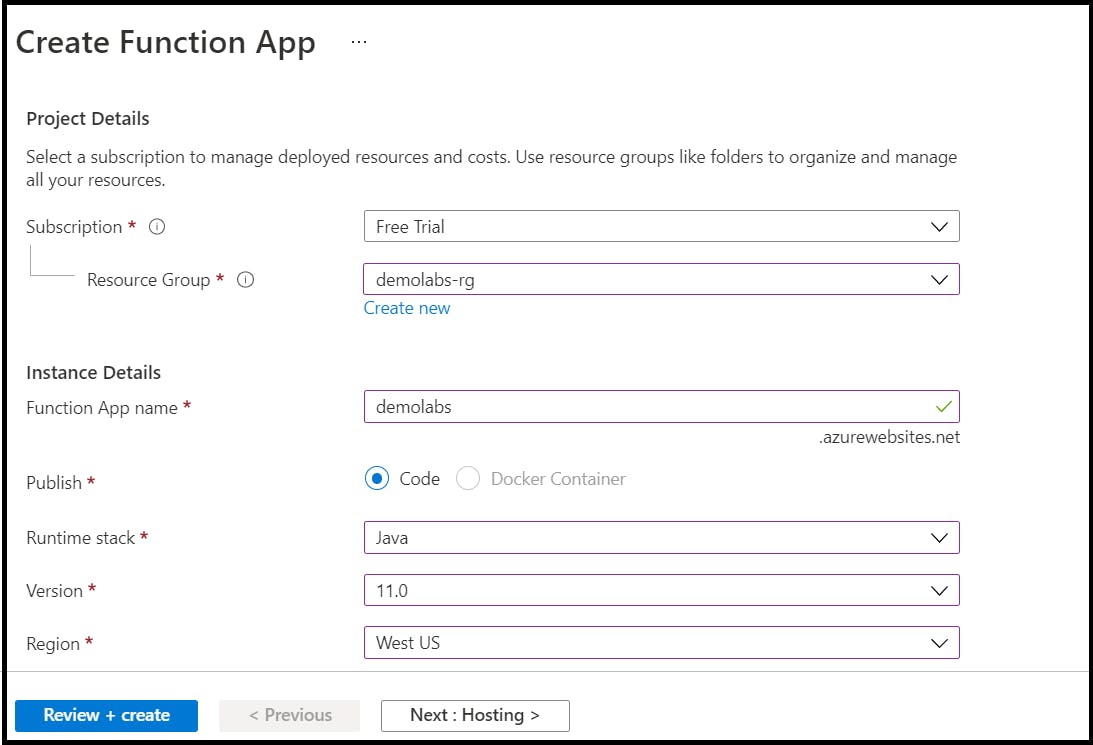

Step3: From the Basics tab, provide the necessary data as

Basics

Subscription

Resource Group: Select from existing resource group or create new resource group

Function App Name: Name of the Azure Functions

Publish: Deploy by code or Docker container

Runtime Stack: Runtime stack, i.e., Java/Node.js/Python/PowerShell Core/Custom Handler

Version

Region: Location in which Azure Functions is desired to be created

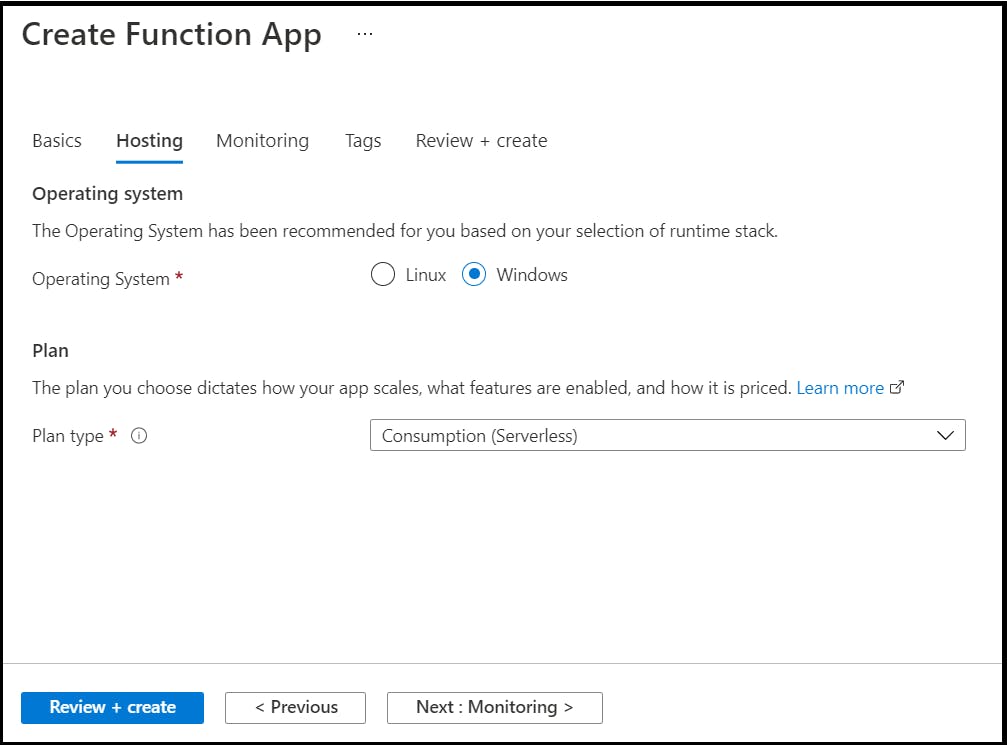

Hosting

Operating System

Plan: Consumption (Serverless)/Functions Premium/App Service Plan

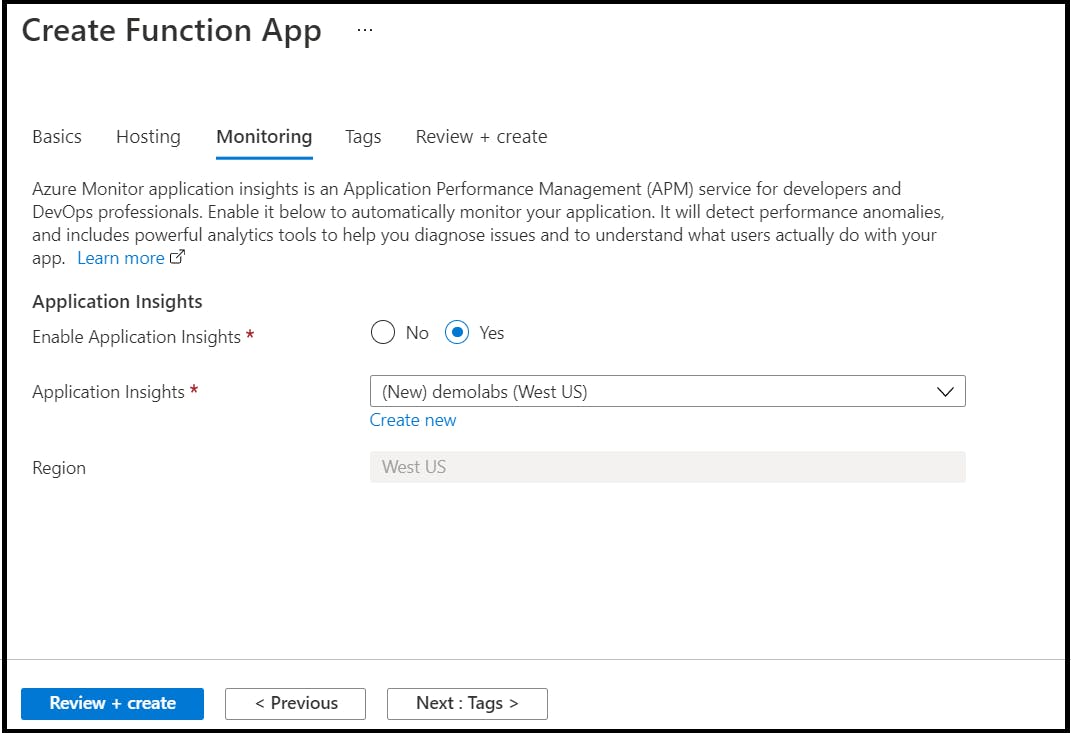

Monitoring

Enable Application Insights: Yes, to enable log monitoring of Azure Functions

Application Insights: New application insights service

Region: Location in which the Application Insights service is to be created

Step4: Click on Review+Create to review the settings and deploy the service

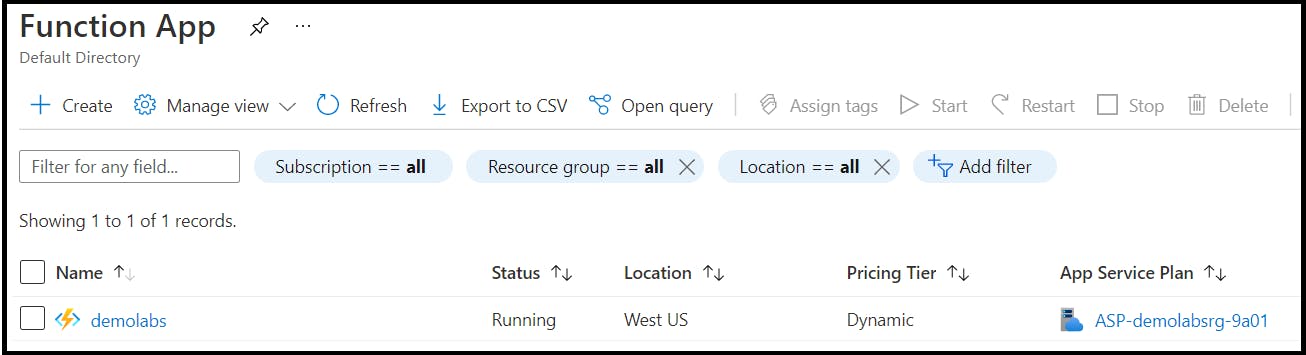

Once the deployment succeeds, the demolabs Function App is created and running on the configured App Service Plan

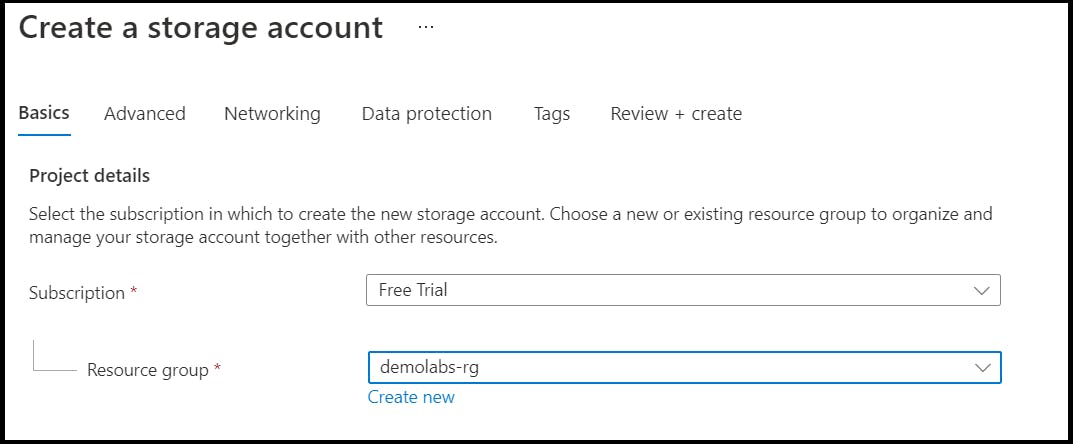

Create Azure Storage Account and Blob in Portal

Step1: Search for Storage Account from the search window in Azure portal

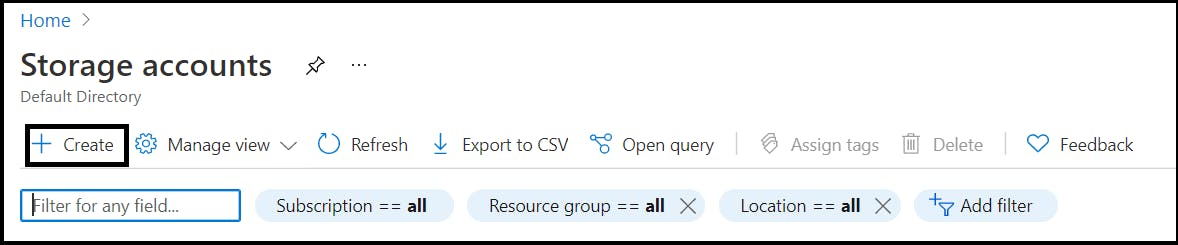

Step2: Click on Create to create new storage account instance

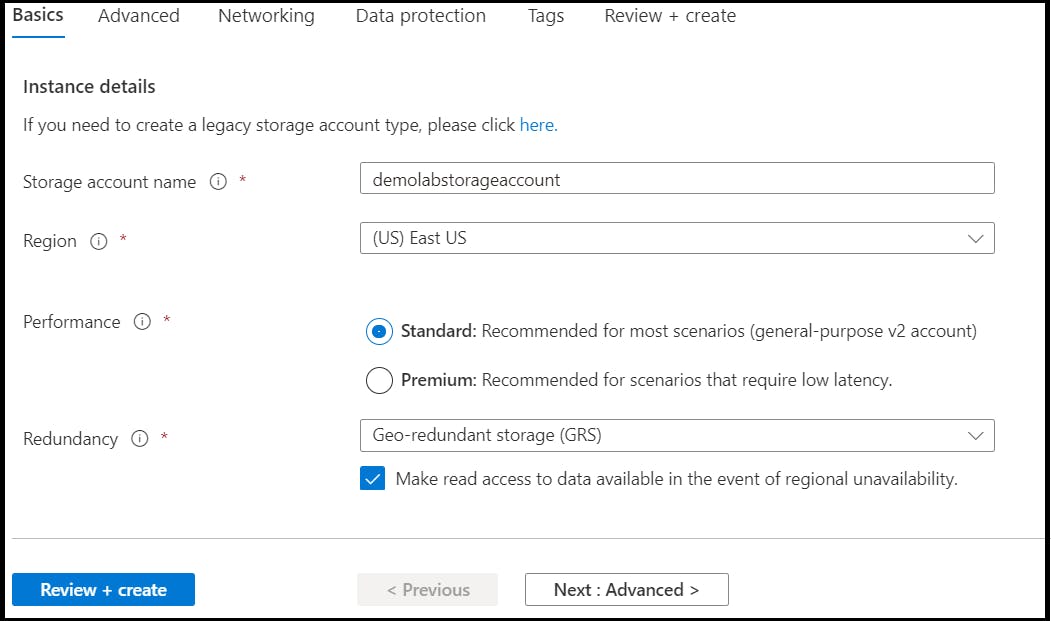

Step3: From Basics section, provide necessary details

Subscription

Resource Group: Select existing resource group or create new resource group

Storage account name: Storage account service name

Region: Region in which the Azure Storage account is desired to be created

Performance: Standard/Premium

Redundancy: GRS/LRS/ZRS/GZRS

Sections such as Advanced, Networking and Data protection are left at their default values for this POC.

Step4: Once all details are provided, click on Review+Create to complete the deployment of service.

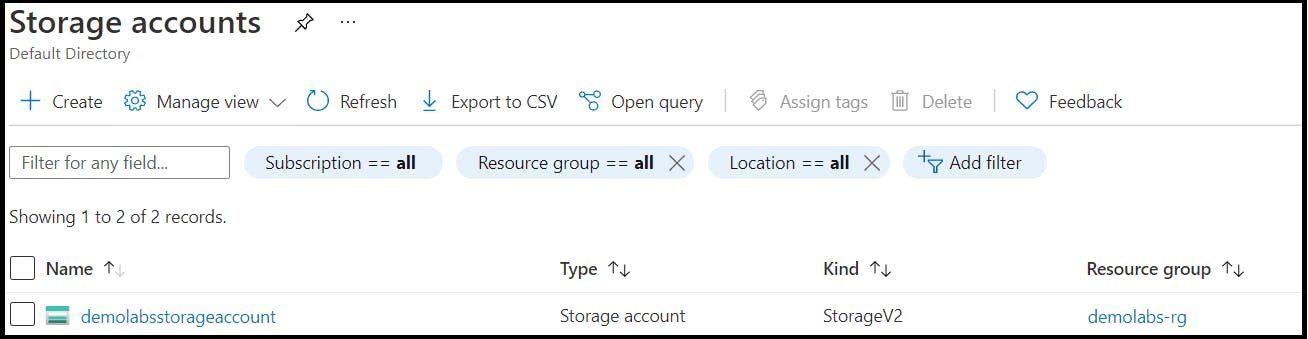

The storage account demolabsstorageaccount is created

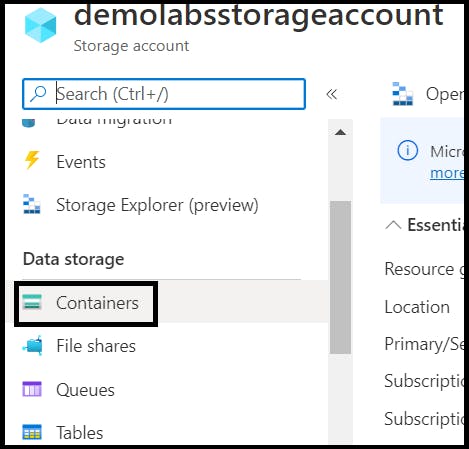

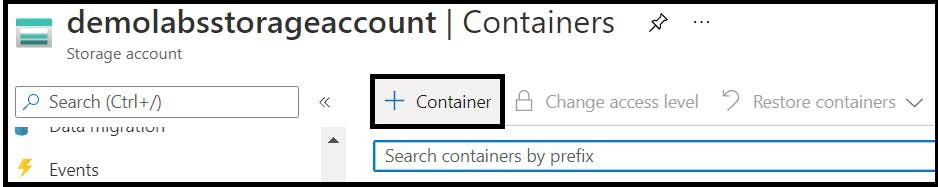

Step5: Navigate to demolabsstorageaccount and click on Container to create new container

Step 6: Provide the name of the container to be created. In this article, three containers are created with name

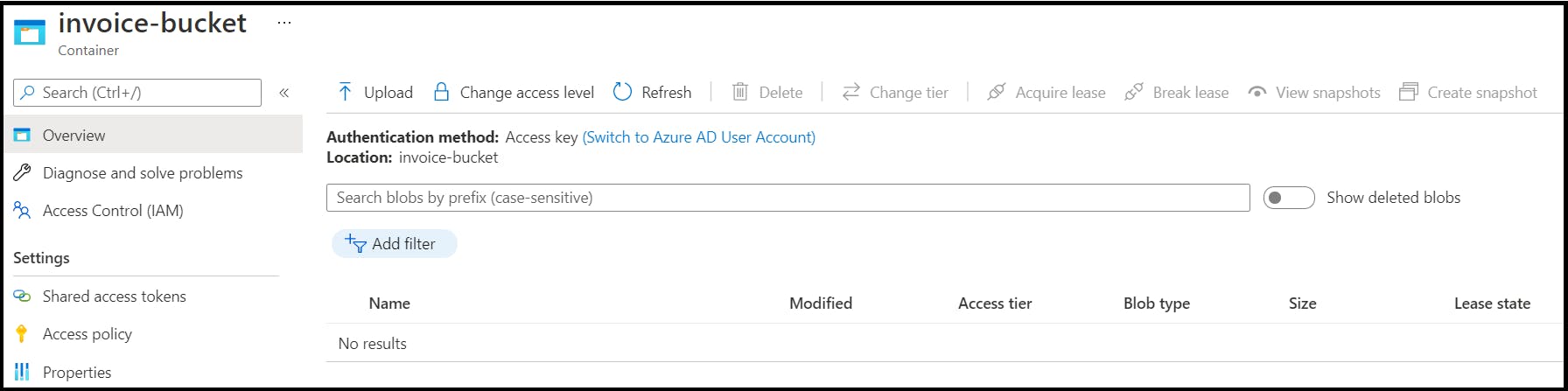

invoice-bucket: A common storage place to dump all the invoices (Sales, Purchase, Travel)

purchase: Place to store purchase invoices

sales: Place to store sales invoices

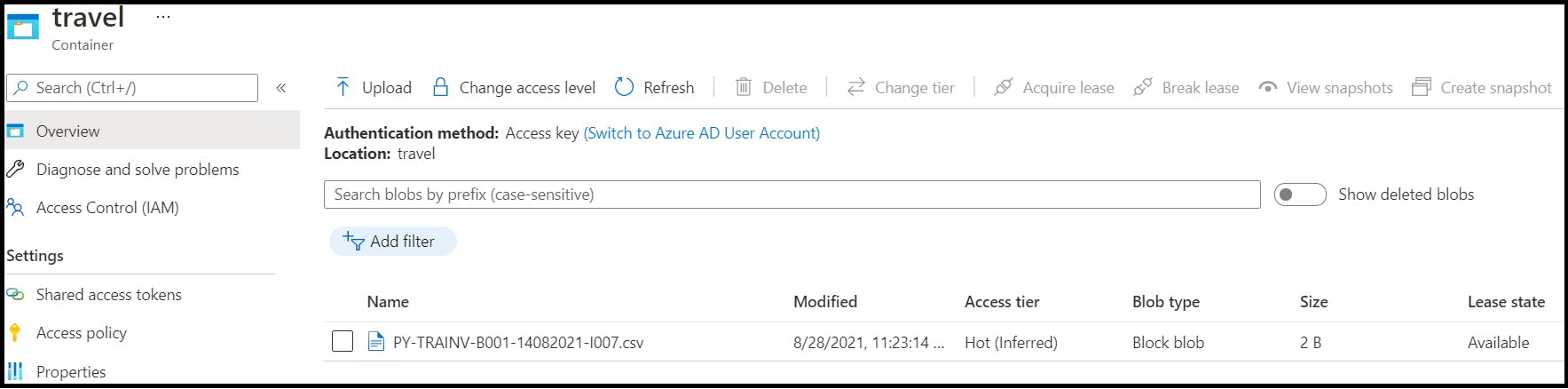

travel: Place to store travel invoices
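Container names have to follow the Azure Storage naming rules: 3 to 63 characters, only lowercase letters, digits and hyphens, starting and ending with a letter or digit, and no consecutive hyphens. The sketch below shows one way to check a name before creating a container. The class `ContainerNames` is a hypothetical helper and not part of any Azure SDK.

```java
import java.util.regex.Pattern;

/** Hypothetical helper that checks a name against the container naming rules. */
final class ContainerNames {
    // First character: letter or digit. Then 2 to 62 further characters, where a
    // hyphen is only accepted when a letter or digit follows it directly.
    private static final Pattern VALID =
            Pattern.compile("^[a-z0-9](?:[a-z0-9]|-(?=[a-z0-9])){2,62}$");

    static boolean isValid(String name) {
        return name != null && VALID.matcher(name).matches();
    }
}
```

All four names used in this article (`invoice-bucket`, `purchase`, `sales`, `travel`) pass this check, whereas a name such as `Sales` is rejected because of the uppercase letter.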

Manage Azure Storage Blobs using Azure Functions (Java)

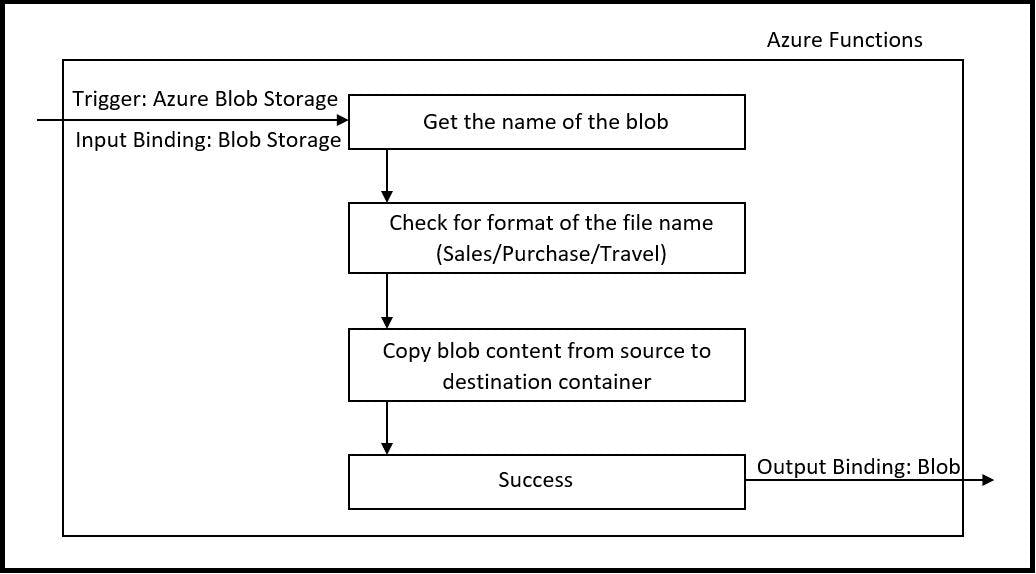

Flow Diagram: Trigger, Input Binding, Output Binding

Diagram 1.3. Flow Diagram

Dependencies

Dependencies to be added to the Spring Boot project's pom.xml to work with the Storage account and Azure Functions:

    <dependency>
        <groupId>com.microsoft.azure.functions</groupId>
        <artifactId>azure-functions-java-library</artifactId>
        <version>${azure.functions.java.library.version}</version>
    </dependency>
    <dependency>
        <groupId>com.azure</groupId>
        <artifactId>azure-storage-blob</artifactId>
        <version>12.12.0</version>
        <!-- Exclusion added to resolve a runtime error -->
        <exclusions>
            <exclusion>
                <groupId>com.azure</groupId>
                <artifactId>azure-core-http-netty</artifactId>
            </exclusion>
        </exclusions>
    </dependency>
    <!-- OkHttp client added because the Netty HTTP client is excluded above -->
    <dependency>
        <groupId>com.azure</groupId>
        <artifactId>azure-core-http-okhttp</artifactId>
        <version>1.2.1</version>
    </dependency>
    <dependency>
        <groupId>org.slf4j</groupId>
        <artifactId>slf4j-api</artifactId>
        <version>1.7.5</version>
    </dependency>
    <dependency>
        <groupId>org.slf4j</groupId>
        <artifactId>slf4j-simple</artifactId>
        <!-- Kept on the same version as slf4j-api to avoid an API/binding version mismatch -->
        <version>1.7.5</version>
    </dependency>

In pom.xml, provide the following details:

- functionAppName
- resourceGroup
- appServicePlanName
- region
- runtime

    <configuration>
        <!-- function app name -->
        <appName>demolabs</appName>
        <!-- function app resource group -->
        <resourceGroup>demolabs-rg</resourceGroup>
        <!-- function app service plan name -->
        <appServicePlanName>ASP-demolabsrg-9a01</appServicePlanName>
        <!-- function app region-->
        <!-- refers https://github.com/microsoft/azure-maven-plugins/wiki/Azure-Functions:-Configuration-Details#supported-regions for all valid values -->
        <region>westus</region>
        <!-- function pricingTier, default to be consumption if not specified -->
        <!-- refers https://github.com/microsoft/azure-maven-plugins/wiki/Azure-Functions:-Configuration-Details#supported-pricing-tiers for all valid values -->
        <!-- <pricingTier></pricingTier> -->
        <!-- Whether to disable application insights, default is false -->
        <!-- refers https://github.com/microsoft/azure-maven-plugins/wiki/Azure-Functions:-Configuration-Details for all valid configurations for application insights-->
        <!-- <disableAppInsights></disableAppInsights> -->
        <runtime>
            <!-- runtime os, could be windows, linux or docker-->
            <os>windows</os>
            <javaVersion>8</javaVersion>
        </runtime>
        <appSettings>
            <property>
                <name>FUNCTIONS_EXTENSION_VERSION</name>
                <value>~3</value>
            </property>
        </appSettings>
    </configuration>

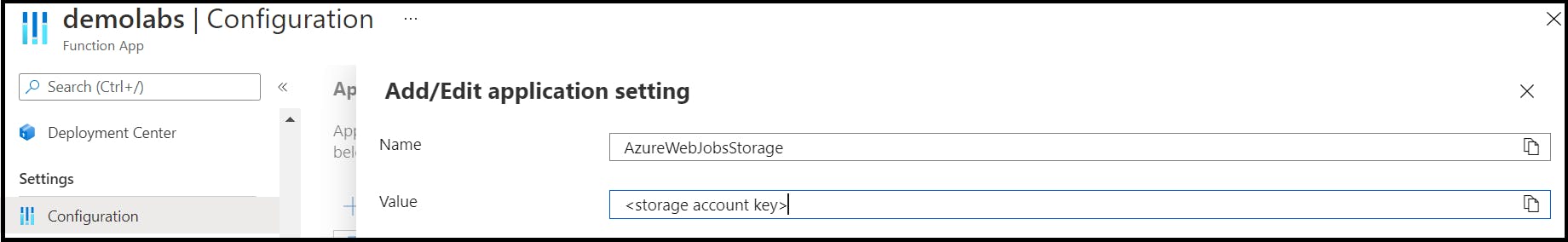

Configure Storage Connection String

For local development, add the connection string to local.settings.json with the key AzureWebJobsStorage and the storage account connection string as its value.

In the portal, navigate to the Function App demolabs and, under the Configuration section, add an application setting named AzureWebJobsStorage whose value is the storage account connection string. (The connection string is available under the storage account's Access keys.)
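Application settings are exposed to the function code as environment variables, which is why the code in this article reads the connection string with `System.getenv`. A missing setting would otherwise only surface later as a less obvious error, so it can help to fail fast with a clear message. The following is a minimal sketch; the class `AppSettings` and its method `require` are hypothetical names, not part of the Azure Functions library.

```java
import java.util.Map;

/** Hypothetical helper that reads a mandatory application setting. */
final class AppSettings {
    static final String CONNECTION_NAME = "AzureWebJobsStorage";

    /** Returns the value for the key, or fails with a descriptive message. */
    static String require(Map<String, String> environment, String key) {
        String value = environment.get(key);
        if (value == null || value.trim().isEmpty()) {
            throw new IllegalStateException("Missing application setting: " + key);
        }
        return value;
    }
}
```

Inside the function this could be called as `AppSettings.require(System.getenv(), AppSettings.CONNECTION_NAME)`. Passing the environment as a map keeps the helper easy to test.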

Get Container Reference

Containers in the storage account are managed through a container reference. The method getContainerReference returns this reference.

    import com.azure.storage.blob.BlobContainerClient;
    import com.azure.storage.blob.BlobServiceClient;
    import com.azure.storage.blob.BlobServiceClientBuilder;

    /**
     * To get target container reference object
     *
     * @param destinationContainerName Target container name to copy blobs from source container
     * @return BlobContainerClient object of the target container
     */
    public BlobContainerClient getContainerReference(String destinationContainerName) {
        // The connection string is read from the AzureWebJobsStorage application setting
        String connectionString = System.getenv(CONNECTION_NAME);
        BlobServiceClient blobServiceClient = new BlobServiceClientBuilder()
                .connectionString(connectionString)
                .buildClient();
        return blobServiceClient.getBlobContainerClient(destinationContainerName);
    }

Create new Blob

The method uploadBlob creates a new blob from the function.

    import com.azure.storage.blob.BlobClient;
    import java.io.ByteArrayInputStream;
    import java.io.InputStream;

    /**
     * Create a new blob in the target container
     *
     * @param containerName Target container to create new blob
     * @param fileName Blob name
     * @param content blob content
     */
    public void uploadBlob(String containerName, String fileName, byte[] content) {
        BlobContainerClient container = this.getContainerReference(containerName);
        BlobClient blob = container.getBlobClient(fileName + CSV);
        InputStream inputContent = new ByteArrayInputStream(content);
        // The exact length is known from the array; available() is only an estimate in general
        blob.upload(inputContent, content.length);
    }

Validate Blob Creation

The method blobExists checks whether the blob exists in the Azure Storage account container.

    /**
     * Check blob exists or not
     *
     * @param containerName Target container
     * @param fileName Blob name
     * @return true/false flag
     */
    public boolean blobExists(String containerName, String fileName) {
        BlobContainerClient container = this.getContainerReference(containerName);
        BlobClient blob = container.getBlobClient(fileName + CSV);
        return blob.exists();
    }

Delete Blob

The method deleteBlob deletes an existing blob from a storage account container.

    /**
     * Delete a blob from the given container
     *
     * @param containerName Target container
     * @param fileName Blob name to delete
     */
    public void deleteBlob(String containerName, String fileName) {
        BlobContainerClient container = this.getContainerReference(containerName);
        BlobClient blob = container.getBlobClient(fileName + CSV);
        blob.delete();
    }

Blob Processing Based on Use case

The method run(@BlobTrigger, @BindingName, ExecutionContext) is the main entry point for this Azure Function. The name of the function is set using the @FunctionName annotation. A @BlobTrigger is configured for the container invoice-bucket, so the Azure Function is invoked whenever a new blob with the .csv extension is created in that container. @BindingName binds the name of the newly uploaded blob to the filename parameter. Because the trigger path is invoice-bucket/{name}.csv, the bound name does not include the .csv extension.

    import com.microsoft.azure.functions.ExecutionContext;
    import com.microsoft.azure.functions.annotation.BindingName;
    import com.microsoft.azure.functions.annotation.BlobTrigger;
    import com.microsoft.azure.functions.annotation.FunctionName;

    /**
     * Blob trigger function to copy blobs to respective invoice containers.
     */
    @FunctionName("blobprocessor")
    public void run(
            @BlobTrigger(name = "file", dataType = "binary", path = "invoice-bucket/{name}.csv",
                    connection = CONNECTION_NAME) byte[] content,
            @BindingName("name") String filename,
            final ExecutionContext context) {
        try {
            if (filename.startsWith(SALES_INVOICE)) {
                this.uploadBlob(SALES, filename, content);
                if (this.blobExists(SALES, filename)) {
                    context.getLogger().info("Blob upload success for blob " + filename + ".csv to container " + SALES);
                    // Delete the source blob only after the copy is confirmed
                    this.deleteBlob(INVOICE_BUCKET, filename);
                } else {
                    context.getLogger().warning("Blob upload failed for blob " + filename + ".csv to container " + SALES);
                }
            } else if (filename.startsWith(PURCHASE_INVOICE)) {
                this.uploadBlob(PURCHASE, filename, content);
                if (this.blobExists(PURCHASE, filename)) {
                    context.getLogger().info("Blob upload success for blob " + filename + ".csv to container " + PURCHASE);
                    this.deleteBlob(INVOICE_BUCKET, filename);
                } else {
                    context.getLogger().warning("Blob upload failed for blob " + filename + ".csv to container " + PURCHASE);
                }
            } else if (filename.startsWith(TRAVEL_INVOICE)) {
                this.uploadBlob(TRAVEL, filename, content);
                if (this.blobExists(TRAVEL, filename)) {
                    context.getLogger().info("Blob upload success for blob " + filename + ".csv to container " + TRAVEL);
                    this.deleteBlob(INVOICE_BUCKET, filename);
                } else {
                    context.getLogger().warning("Blob upload failed for blob " + filename + ".csv to container " + TRAVEL);
                }
            } else {
                context.getLogger().warning("Incorrect filename. Please check the filename adhered to standards");
            }
        } catch (Exception exception) {
            // Report the failure through the function logger instead of discarding the message
            context.getLogger().severe("Blob processing failed for blob " + filename + ".csv: " + exception.getMessage());
        }
    }

In the above code snippet, the try and catch block holds the logic to segregate invoices based on invoice category.

If the name of the newly uploaded blob does not start with any one of the three category prefixes, a warning is logged with the message: Incorrect filename. Please check the filename adhered to standards

Constants used in this function

    private static final String CONNECTION_NAME = "AzureWebJobsStorage";
    private static final String CSV = ".csv";
    private static final String SALES = "sales";
    private static final String PURCHASE = "purchase";
    private static final String TRAVEL = "travel";
    private static final String INVOICE_BUCKET = "invoice-bucket";
    private static final String SALES_INVOICE = "PY-SALINV";
    private static final String PURCHASE_INVOICE = "PY-PURINV";
    private static final String TRAVEL_INVOICE = "PY-TRAINV";
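The mapping from file name prefix to target container can also be expressed as a lookup table, so that a new invoice category only needs one more table entry instead of another if/else branch. This is a sketch of an alternative, not the structure used in this article's function; the class `InvoiceRouter` and its method `containerFor` are hypothetical names. The prefixes and container names are the ones defined by the constants above.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Optional;

/** Hypothetical table-driven lookup from invoice prefix to target container. */
final class InvoiceRouter {
    private static final Map<String, String> PREFIX_TO_CONTAINER = new LinkedHashMap<>();

    static {
        PREFIX_TO_CONTAINER.put("PY-SALINV", "sales");
        PREFIX_TO_CONTAINER.put("PY-PURINV", "purchase");
        PREFIX_TO_CONTAINER.put("PY-TRAINV", "travel");
    }

    /** Returns the target container, or empty when the name matches no known prefix. */
    static Optional<String> containerFor(String filename) {
        for (Map.Entry<String, String> entry : PREFIX_TO_CONTAINER.entrySet()) {
            if (filename.startsWith(entry.getKey())) {
                return Optional.of(entry.getValue());
            }
        }
        return Optional.empty();
    }
}
```

With such a table, the body of the function could shrink to a single upload, verify and delete sequence that runs when `containerFor` returns a value, and the warning about an incorrect file name when it returns empty.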

Build, Run and Deploy Azure Function

Step1: Log in to Azure using the below command

    az login

Step2: Build the code locally

    mvn clean package

Step3: Once the build succeeds, run the code locally

    mvn azure-functions:run

Step4: After testing and a sanity check locally, deploy the Azure Function using the below command

    mvn azure-functions:deploy

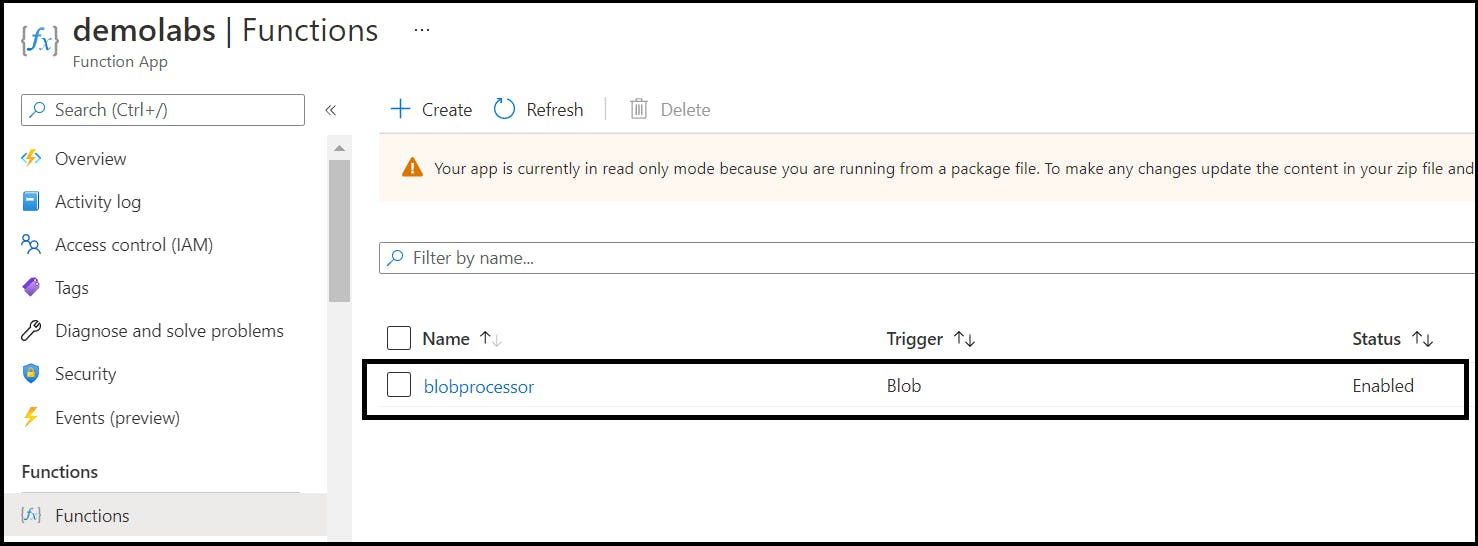

Once deployed successfully, navigate to the Function App demolabs; under Functions, a new function named blobprocessor has been created

End-to-End Flow

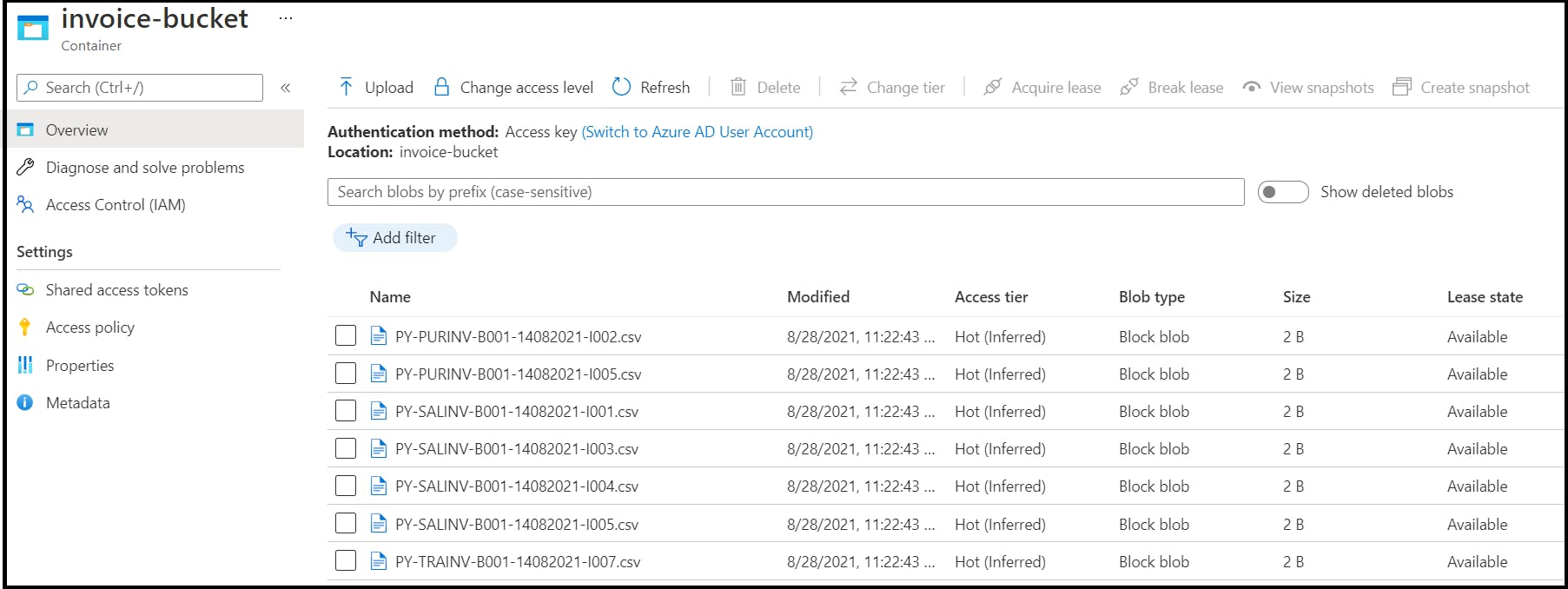

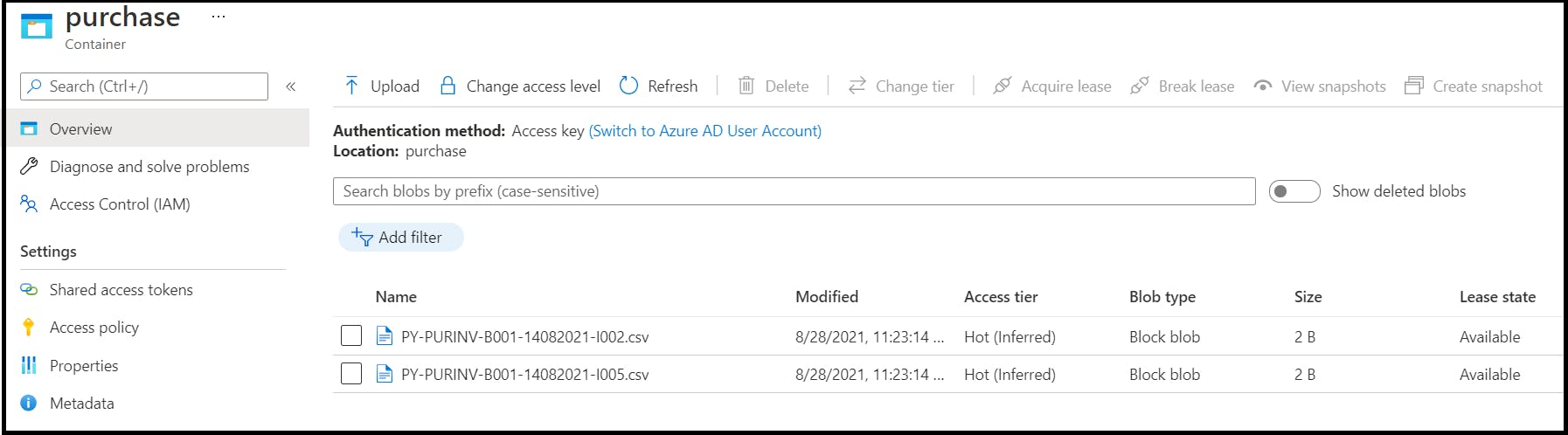

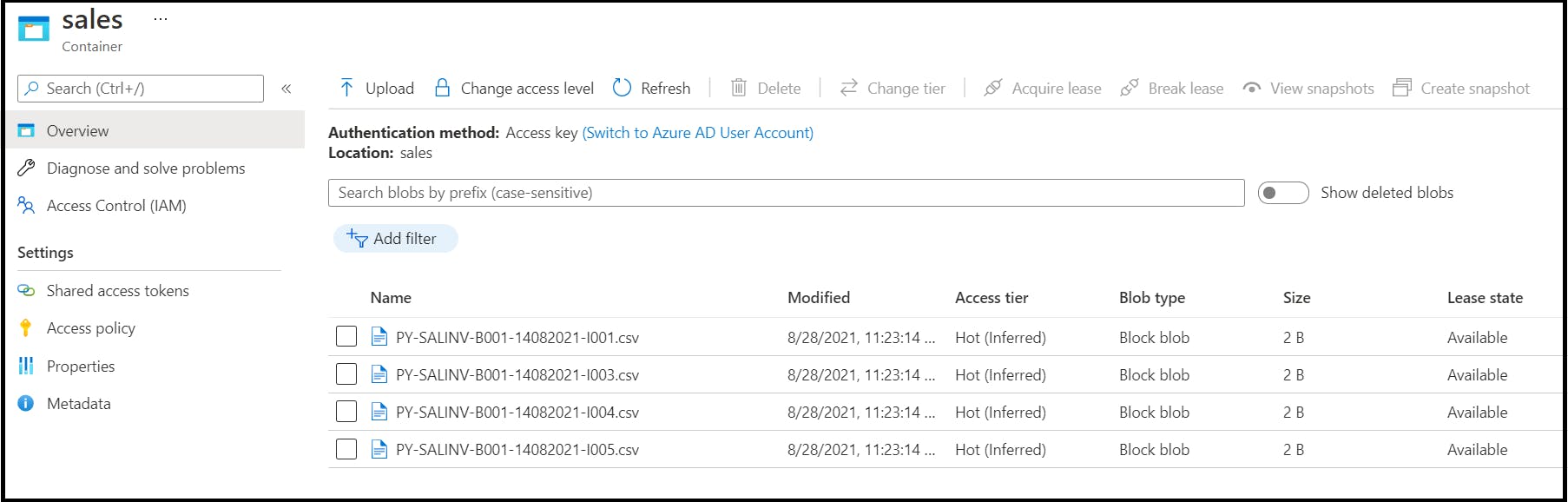

In the Azure Storage account, Purchase, Sales and Travel invoices are uploaded to the invoice-bucket container. Once uploaded, the Azure Function is invoked and the invoices are moved to the container of the respective category, i.e., purchase, sales or travel

Step1: Upload 7 invoices: Purchase invoices (2 nos), Sales invoices (4 nos), Travel invoice (1 no).

Once uploaded, the Azure Function gets invoked and the invoices are copied to the respective containers

Purchase Container:

Sales Container:

Travel Container:

After the blobs are processed and moved to their respective containers, the invoices are removed from invoice-bucket (as they would otherwise be redundant copies)
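The copy, verify and delete sequence of this flow can be tried out without an Azure subscription by replacing the storage account with in-memory maps. The sketch below is only a simulation under that assumption: the class `InvoiceFlowSimulation` is hypothetical, the upload method calls the processing step directly where Azure would fire the blob trigger, and the invoice names used with it are made up.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.Map;

/** Hypothetical in-memory stand-in for the storage account and the blob-triggered function. */
final class InvoiceFlowSimulation {
    // container name -> (blob name -> content)
    private final Map<String, Map<String, byte[]>> containers = new HashMap<>();

    InvoiceFlowSimulation() {
        for (String name : Arrays.asList("invoice-bucket", "sales", "purchase", "travel")) {
            containers.put(name, new HashMap<String, byte[]>());
        }
    }

    /** Simulates an upload to invoice-bucket followed by the trigger firing. */
    void upload(String blobName, byte[] content) {
        containers.get("invoice-bucket").put(blobName, content);
        process(blobName, content);
    }

    private void process(String blobName, byte[] content) {
        String target = targetFor(blobName);
        if (target == null) {
            return; // unknown prefix: the blob stays in invoice-bucket
        }
        containers.get(target).put(blobName, content);        // copy
        if (containers.get(target).containsKey(blobName)) {   // verify
            containers.get("invoice-bucket").remove(blobName); // delete the source
        }
    }

    private static String targetFor(String blobName) {
        if (blobName.startsWith("PY-SALINV")) return "sales";
        if (blobName.startsWith("PY-PURINV")) return "purchase";
        if (blobName.startsWith("PY-TRAINV")) return "travel";
        return null;
    }

    int count(String container) {
        return containers.get(container).size();
    }
}
```

Uploading two purchase, four sales and one travel invoice to this simulation leaves 2, 4 and 1 blobs in the purchase, sales and travel containers and none in invoice-bucket, which mirrors the result described above.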